Insurance companies have a lot of information about their policyholders, but just because they possess the information doesn’t mean they really know their policyholders.

Let’s be honest – insurers often can’t access all of this data, and even when they can, they struggle to interpret what it means. Why? Much of an insurance company’s data is siloed in different systems within the organization and cannot be leveraged for strategic business decision-making. In fact, according to Willis Towers Watson, less than 20% of the data that insurers have is being used for this purpose.

But what if life insurers used this data?

Knowledge is power, and the more insurers know about their policyholders, the more accurate and targeted they can be in their approach.

Let’s say a certain policyholder has been missing premium payments for the last few months, which could suggest that the customer is nearing lapsation. The finance department has this information, but the marketing and sales departments are likely kept in the dark with no access to these important records. Any other information about this particular policyholder, such as knowing he moved to a low-income neighborhood three months ago and that he is a mechanic, is not available to these departments in a clear or timely fashion.

If the organization had access to the whole story, it would be able to act preemptively and strategize regarding the value of a specific policyholder.

Should they attempt to contact the customer to prevent lapse? Should they attempt a cross-sale? Should they allow his policy to lapse? How old is his policy? What is his premium? What makes more sense for the business?

The answers to all of these pressing insurance questions can be revealed by the data.

A buried treasure, just waiting to be discovered

Insurers are sitting on tons of data, but it doesn’t mean much if they can’t comprehend it, draw connections or gain insights.

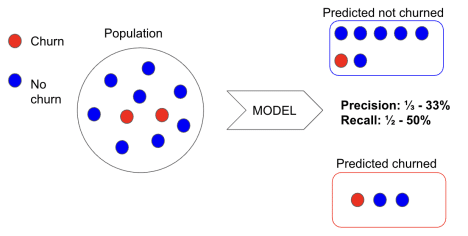

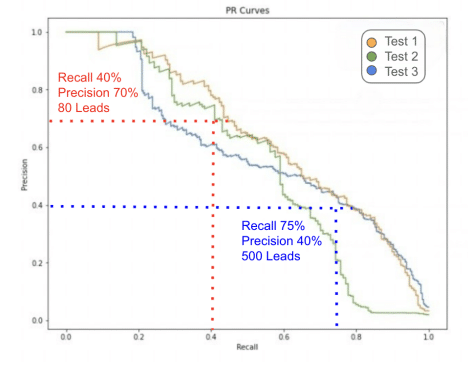

Atidot’s technology uses predictive analytics and AI to help insurance companies do just this. By augmenting customer data with open-source information, Atidot can predict insurance-specific behavior based on big data analysis and trends. The Atidot algorithm automatically scans all data resources and pinpoints relevant insights about policyholders that insurers can turn into business opportunities.

The solution enables insurance companies to know their customers better – and to know precisely which of their policyholders are likely to lapse, are underinsured, or are more likely to respond to a cross-sale. It enables insurers to employ a more customer-centric approach, thereby increasing customer satisfaction and loyalty.

With Atidot, insurance companies can uncover invisible value in their existing books of business. They’ve been sitting on a goldmine all this time.
